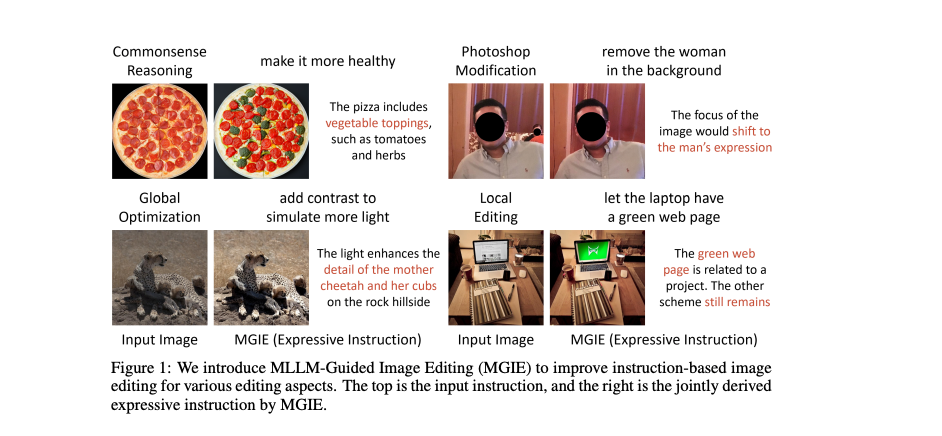

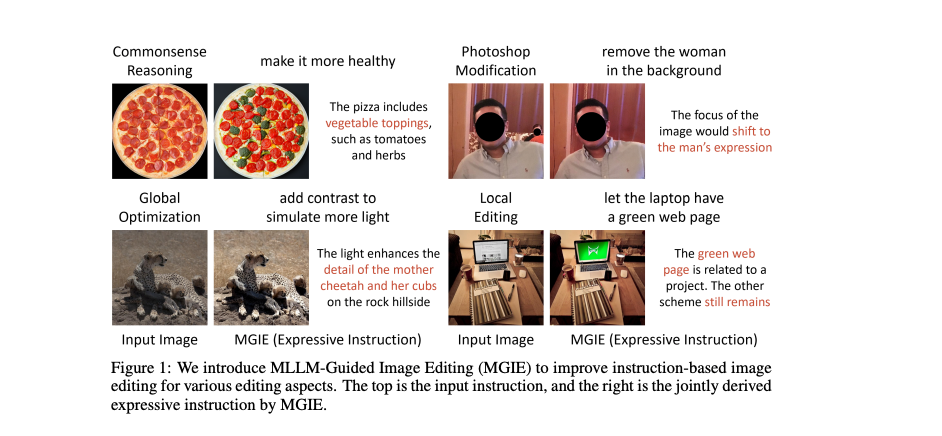

Apple has released an open-source AI model called “MGIE,” short for MLLM-Guided Image Editing, which edits images based on natural language commands. It is a notable step forward for visual content creation.

The model can handle various editing aspects, such as Photoshop-style modification, global photo optimization, and local editing.

How does MGIE work?

At its core, MGIE uses multimodal large language models (MLLMs) to interpret user instructions and carry out pixel-level edits.

MGIE was developed in collaboration with researchers from the University of California, Santa Barbara, and is described in a paper presented at the International Conference on Learning Representations (ICLR) 2024.

MGIE is based on the idea of using MLLMs, which are powerful AI models that can process both text and images, to enhance instruction-based image editing.

MLLMs have shown remarkable capabilities in cross-modal understanding and visual-aware response generation, but they have not been widely applied to image editing tasks.

MGIE integrates MLLMs into the image editing process in two ways. First, it uses an MLLM to derive expressive instructions from the user's input. These instructions are concise and clear, and they give the editing process explicit guidance.

For example, given the input “make the sky more blue,” MGIE might derive a more explicit instruction along the lines of “increase the saturation of the sky region by 20%.”

Second, it uses the MLLM to generate a “visual imagination,” a latent representation of the desired edit. This representation captures the essence of the edit and guides the pixel-level manipulation, which MGIE performs with a diffusion model. MGIE is trained with a novel end-to-end scheme that jointly optimizes the instruction derivation, visual imagination, and image editing modules.
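The two-stage flow described above can be illustrated with a toy data-flow sketch. This is not Apple's implementation: the function names (`derive_expressive_instruction`, `imagine`, `diffuse_edit`, `mgie_like_edit`) are hypothetical, the "image" is a tiny grid of RGB tuples, and simple stubs stand in for the MLLM and the diffusion model so the example runs on its own. In particular, the "visual imagination" is assumed here to be just three per-channel scale factors, and the sketch shows inference only, not the joint end-to-end training.

```python
from dataclasses import dataclass
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


@dataclass
class EditResult:
    expressive_instruction: str   # what the (stubbed) MLLM derived from the command
    latent_guidance: List[float]  # toy stand-in for the "visual imagination"
    image: Image                  # the edited pixels


def derive_expressive_instruction(image: Image, command: str) -> str:
    """Hypothetical stub for step 1. A real MLLM would inspect the image and
    rewrite a terse command into explicit guidance."""
    return f"{command} (explicit: adjust only the affected region)"


def imagine(image: Image, instruction: str) -> List[float]:
    """Hypothetical stub for step 2. A real MLLM would emit a latent
    representation of the edit; here we encode a single 'more blue' boost
    as per-channel scale factors for R, G, B."""
    boost = 0.2 if "blue" in instruction else 0.0
    return [0.0, 0.0, boost]


def diffuse_edit(image: Image, guidance: List[float]) -> Image:
    """Hypothetical stub for the pixel-level editor (a diffusion model in MGIE).
    Here: scale each channel by (1 + guidance) and clamp to [0, 255]."""
    return [
        [
            tuple(max(0, min(255, round(c * (1 + g)))) for c, g in zip(px, guidance))
            for px in row
        ]
        for row in image
    ]


def mgie_like_edit(image: Image, command: str) -> EditResult:
    """Chain the three stages: instruction derivation -> imagination -> editing."""
    instruction = derive_expressive_instruction(image, command)
    guidance = imagine(image, instruction)
    return EditResult(instruction, guidance, diffuse_edit(image, guidance))


sky = [[(100, 150, 200), (90, 140, 210)]]
result = mgie_like_edit(sky, "make the sky more blue")
print(result.expressive_instruction)
print(result.image)
```

The point of the sketch is the separation of concerns: the command is first made explicit, then turned into a latent guidance signal, and only that signal drives the pixel changes.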

What can MGIE do?

MGIE can handle a wide range of editing scenarios, from simple color adjustments to complex object manipulations. The model can also perform global and local edits, depending on the user’s preference. Some of the features and capabilities of MGIE are:

- Expressive instruction-based editing: Can produce concise and clear instructions that guide the editing process effectively. This not only improves the quality of the edits but also enhances the overall user experience.

- Photoshop-style modification: Can perform common Photoshop-style edits, such as cropping, resizing, rotating, flipping, and adding filters. The model can also apply more advanced edits, such as changing the background, adding or removing objects, and blending images.

- Global photo optimization: Can optimize the overall quality of a photo, such as brightness, contrast, sharpness, and color balance. The model can also apply artistic effects like sketching, painting and cartooning.

- Local editing: Can edit specific regions or objects in an image, such as faces, eyes, hair, clothes, and accessories. The model can also modify the attributes of these regions or objects, such as shape, size, color, texture and style.

How to use MGIE?

MGIE is available as an open-source project on GitHub, where users can find the code, data, and pre-trained models. The project also provides a demo notebook that shows how to use MGIE for various editing tasks.

You can also try out Apple-MGIE online through a web demo hosted on Hugging Face Spaces, a platform for sharing and collaborating on machine learning (ML) projects.

MGIE is designed to be easy to use and customizable. Users give natural language instructions, and MGIE returns the edited image along with the expressive instruction it derived. Users can then give further instructions to refine an edit or request a different one. MGIE can also be integrated into other applications or platforms that need image editing functionality.
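The request-and-refine workflow described above can be modeled as a small editing session that keeps a history of results. This is a hypothetical sketch: `EditSession`, `refine`, `retry`, and `toy_editor` are illustrative names that are not part of the MGIE repository, and the editor is assumed to be any callable that returns an edited image plus its derived instruction. A toy grayscale brightness editor stands in for MGIE so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Assumed editor signature: (image, user_instruction) -> (edited_image, derived_instruction)
Editor = Callable[[List[int], str], Tuple[List[int], str]]


@dataclass
class Step:
    user_instruction: str
    derived_instruction: str
    image: List[int]


@dataclass
class EditSession:
    editor: Editor
    original: List[int]
    history: List[Step] = field(default_factory=list)

    @property
    def current(self) -> List[int]:
        """The latest edited image, or the original if nothing has been edited yet."""
        return self.history[-1].image if self.history else self.original

    def refine(self, instruction: str) -> Step:
        """Apply a follow-up instruction on top of the latest result."""
        return self._apply(self.current, instruction)

    def retry(self, instruction: str) -> Step:
        """Discard the latest result and request a different edit from the previous image."""
        if self.history:
            self.history.pop()
        return self._apply(self.current, instruction)

    def _apply(self, base: List[int], instruction: str) -> Step:
        image, derived = self.editor(base, instruction)
        step = Step(instruction, derived, image)
        self.history.append(step)
        return step


def toy_editor(image: List[int], instruction: str) -> Tuple[List[int], str]:
    """Toy stand-in for MGIE: 'brighter' adds 10 to every pixel, 'darker' subtracts 10."""
    delta = 10 if "brighter" in instruction else -10 if "darker" in instruction else 0
    edited = [max(0, min(255, p + delta)) for p in image]
    return edited, f"shift brightness by {delta:+d}"


session = EditSession(toy_editor, [100, 200, 250])
session.refine("make it brighter")
session.refine("a bit brighter")
step = session.retry("make it darker instead")
print(step.image, step.derived_instruction)
```

Keeping every step, including the derived instruction, makes it easy to show users what the model understood and to roll back one edit before trying another.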

Why is MGIE so important?

MGIE's introduction marks a milestone in instruction-based image editing, a task that is both challenging and important for AI and for human creativity. It demonstrates how MLLMs can improve image editing and opens up new possibilities for cross-modal interaction.

Beyond its research implications, MGIE is a practical tool. It can help users create, modify, and optimize images for personal or professional purposes in areas such as social media, e-commerce, education, entertainment, and art, and it lets them express ideas and emotions through images and explore their creativity.

For Apple, MGIE highlights the company's growing work in AI research and development. Apple has expanded its machine learning capabilities in recent years, and MGIE is one of its most visible demonstrations yet of how AI can enhance everyday creative tasks.

While MGIE is a notable advance, there is still plenty of work ahead to improve multimodal AI systems. The pace of progress in this field is accelerating, however, and assistive AI of this kind may become a standard part of the creative toolkit.