In the ever-evolving landscape of artificial intelligence, one of the most fascinating and impactful advancements is the convergence of text and image generation. This groundbreaking technology, known as Text-to-Image Diffusion, has opened new frontiers in creativity and problem-solving, blurring the lines between what is written and what can be visually depicted.

Here is a notable new contribution to this demanding domain…

Authors: Zhenzhen Weng, Jingyuan Liu, Hao Tan, Zhan Xu, Yang Zhou, Serena Yeung-Levy, Jimei Yang

Abstract

Diffusion models have exhibited exceptional performance in text-to-image generation and editing. However, existing methods often struggle with complex text prompts that involve multiple objects, each with multiple attributes and relationships.

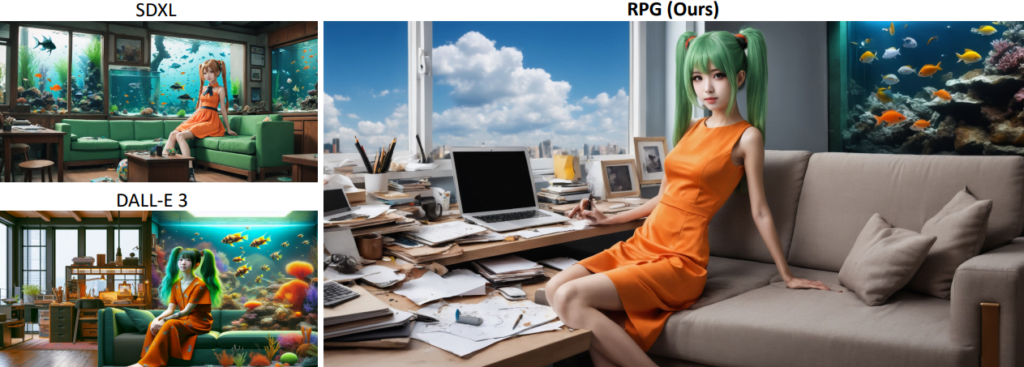

In this paper, the authors propose a new training-free text-to-image generation and editing framework, Recaption, Plan and Generate (RPG), which harnesses the chain-of-thought reasoning ability of multimodal LLMs (MLLMs) to enhance the compositionality of text-to-image diffusion models.

The approach employs the MLLM as a global planner to decompose the process of generating complex images into multiple simpler generation tasks within subregions. They propose complementary regional diffusion to enable region-wise compositional generation. Furthermore, they integrate text-guided image generation and editing within the proposed RPG in a closed-loop fashion, thereby enhancing generalization ability.
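To make the region-wise idea concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the `Region` class, the `split_columns` and `compose` helpers, the side-by-side column layout, and the `base_weight` blending factor are all assumptions made for illustration, and small nested lists stand in for diffusion latents. In the actual framework the plan would come from the MLLM and the latents from a diffusion model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical plan entry: a sub-prompt and its share of the canvas width."""
    subprompt: str
    width_ratio: float


def split_columns(total_width: int, regions: list[Region]) -> list[tuple[int, int]]:
    """Turn width ratios into non-overlapping [start, end) column ranges."""
    total = sum(r.width_ratio for r in regions)
    bounds, start, acc = [], 0, 0.0
    for i, region in enumerate(regions):
        acc += region.width_ratio
        # The last region always ends at the canvas edge to avoid rounding gaps.
        is_last = i == len(regions) - 1
        end = total_width if is_last else round(total_width * acc / total)
        bounds.append((start, end))
        start = end
    return bounds


def compose(base, regional, bounds, base_weight):
    """Blend a whole-image latent with per-region latents (assumed linear blend)."""
    out = [row[:] for row in base]
    for latent, (start, end) in zip(regional, bounds):
        for y, row in enumerate(out):
            for x in range(start, end):
                row[x] = base_weight * base[y][x] + (1 - base_weight) * latent[y][x - start]
    return out


# Toy plan: two sub-prompts that split the canvas evenly (stand-in for MLLM output).
plan = [Region("a red cat on the left", 1.0), Region("a blue dog on the right", 1.0)]
bounds = split_columns(4, plan)

# Toy 2x4 "latents": constants stand in for real diffusion model outputs.
base = [[0.0] * 4 for _ in range(2)]
regional = [[[1.0] * 2 for _ in range(2)], [[2.0] * 2 for _ in range(2)]]
canvas = compose(base, regional, bounds, base_weight=0.5)
print(bounds)
print(canvas)
```

Each region only ever writes to its own column range, so sub-prompts cannot overwrite one another, while the shared base latent gives the regions a common context to blend against.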

Extensive experiments demonstrate that the RPG outperforms state-of-the-art text-to-image diffusion models, including DALL-E 3 and SDXL, particularly in multi-category object composition and text-image semantic alignment.

Notably, the RPG framework exhibits wide compatibility with various MLLM architectures (e.g., MiniGPT-4) and diffusion backbones (e.g., ControlNet).

The code is available at: https://github.com/YangLing0818/RPG-DiffusionMaster